Using a Kubernetes Operator to Manage Tenancy in a B2B SaaS App

At Kolide, we recently launched our Kolide Cloud product, which is a Software-as-a-Service application that aims to help macOS administrators leverage the power of osquery to understand the security and integrity of the devices which they’re responsible for managing.

Now that Kolide Cloud is public, I’m excited to share some details on how we build and operate it. In this article, I want to focus on our multi-tenant deployment model and how we use a Kubernetes Operator to deploy and manage many single-tenant instances of our application. Most Operators reason about the lifecycle of a single instance of a service or application, but at Kolide, we’ve written an Operator to manage hundreds of instances of an application which is itself composed of several component services.

Once we’ve discussed the Operator and how Kolide uses it to deploy and manage software, we’ll briefly discuss how it’s built and some things that we’ve learned programming against the Kubernetes API in Go.

Application Tenancy

Companies that create products for other companies or teams often have to decide how to handle the tenancy of each team. The two ends of the spectrum are:

Deploy one monolithic application that handles multi-tenant data isolation via application logic

Deploy and proxy to many instances of smaller, more isolated single-tenant applications

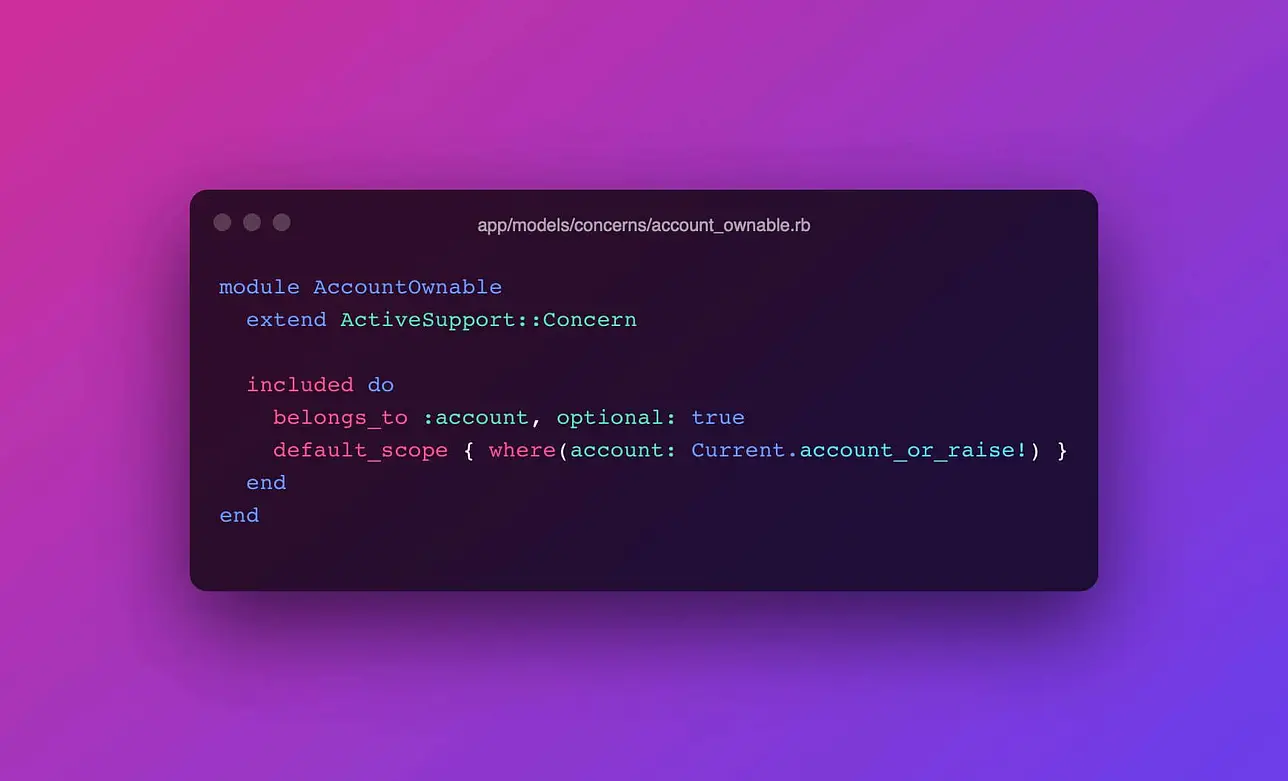

When faced with these two options, most companies choose to build the multi-tenant monolith. While searching for product-market fit, we rapidly prototyped a monolithic Rails application which used the Apartment gem to isolate customer-specific data in separate PostgreSQL schemas.

As we onboarded more and more beta customers, however, we had to account for two facts that are somewhat unique to Kolide:

Kolide builds operating system security and analytics products, so the data we store for our customers is rather sensitive

An instance of our app may be used by 2–10 humans, but those humans may enroll thousands of devices which are effectively chatty API users

Because of this, product developers were spending an unfortunate amount of time reasoning about scalability and data isolation when they should have been able to focus more fully on creating features that add value for customers. As a result, we decided to remove tenancy from the Rails application completely and start deploying and independently scaling single-tenant instances of the app on Kubernetes.

Kubernetes Introduction

Kubernetes is a container-orchestration system that was created at Google as an open-source analog of its internal workload orchestration tool, Borg. If you buy into packaging and distributing software in containers, then tools like Kubernetes, Mesos, and Docker Swarm help you articulate how containerized workloads should be launched and managed, how these workloads communicate with each other, how deploys should work, and so on.

Kubernetes Operators

The Operator pattern was introduced by CoreOS in 2016 to describe a way to embed operational knowledge into software. In the blog post that introduced it, Brandon Philips, the CTO of CoreOS, explains the pattern:

An Operator is an application-specific controller that extends the Kubernetes API to create, configure, and manage instances of complex stateful applications on behalf of a Kubernetes user.

It’s not always clear what exactly it means for a piece of software to be a “Kubernetes Operator”, but I define it as the combination of a Custom Resource Definition (CRD) and a Custom Controller which reacts to changes in instances of the CRD. In this article, I will refer to the Operator when I am describing the capability provided by the combination of the CRD and the Controller.

Custom Resources

In Kubernetes, “resources” are defined via declarative configuration, which is usually written in YAML:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80
```

In this example, the resource is a Deployment. If you “apply” this resource, Kubernetes will endeavor to run 3 replicas of the nginx:1.7.9 container.

Custom Resources allow users to extend the domain language of Kubernetes to map more closely to their environment.

Custom Controllers

Tu Nguyen has written an excellent article on Kubernetes controllers on the Bitnami blog. The Kubernetes documentation describes controllers this way:

In Kubernetes, a controller is a control loop that watches the shared state of the cluster through the API server and makes changes attempting to move the current state towards the desired state.

So if the set of Custom Resources is the “desired state”, then it is the responsibility of the Custom Controller to observe the actual state and endeavor to converge actual state and desired state by taking actions against the running system.
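The convergence idea can be illustrated without a cluster at all. The sketch below is a simplified, stdlib-only model rather than client-go code: state is just a set of object names (for example, tenant namespaces), and the hypothetical `create` and `remove` callbacks stand in for calls to the API server. The property that matters is that a second pass over already-converged state takes no actions.

```go
package main

import (
	"fmt"
	"sort"
)

// reconcile performs one pass of a control loop over a simplified model of
// cluster state in which each key names an object. It calls create for
// objects that are desired but missing and remove for objects that exist but
// are no longer desired. On converged state it does nothing, which is what
// makes the loop safe to run repeatedly.
func reconcile(desired, actual map[string]bool, create, remove func(string)) {
	var toCreate, toRemove []string
	for name := range desired {
		if !actual[name] {
			toCreate = append(toCreate, name)
		}
	}
	for name := range actual {
		if !desired[name] {
			toRemove = append(toRemove, name)
		}
	}
	// Sort so the order of actions is deterministic.
	sort.Strings(toCreate)
	sort.Strings(toRemove)
	for _, name := range toCreate {
		create(name)
	}
	for _, name := range toRemove {
		remove(name)
	}
}

func main() {
	desired := map[string]bool{"tenant-a": true, "tenant-b": true}
	actual := map[string]bool{"tenant-b": true, "tenant-old": true}

	create := func(n string) { actual[n] = true; fmt.Println("create", n) }
	remove := func(n string) { delete(actual, n); fmt.Println("remove", n) }

	reconcile(desired, actual, create, remove) // creates tenant-a, removes tenant-old
	reconcile(desired, actual, create, remove) // converged: no actions
	fmt.Println(len(actual))
}
```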

Prior Work: Multi-Tenancy Working Group

The astute reader may already be aware that there is a Kubernetes Working Group focused on Multi-Tenancy, which is led by David Oppenheimer (Google) and Jessie Frazelle (Microsoft). This working group deals with many different kinds of multi-tenancy and considers a wide variety of threat models. For a more complete overview of the work being done by this Working Group, I suggest David’s KubeCon Europe 2018 talk on Multi-Tenancy in Kubernetes.

At KubeCon Europe 2018, during the Multi-Tenancy WG Deep Dive, Christian Hüning gave a talk on a Tenant Operator that he is working on for his use-case of multi-tenant access to a Kubernetes cluster by potentially hostile users.

While related in several obvious ways, the Operator described here is biased towards the deployment and ongoing management of fairly homogeneous tenants that all come from the same distributor (Kolide Engineering). For our use-case, per-tenant data isolation is critical. Container and network isolation are important as well, but are something we strive to improve over time. Resource metering is a non-issue for us because we don’t bill based on cluster utilization.

Kolide’s Tenant Operator

At Kolide, we have written an Operator which is responsible for managing the lifecycle of tenants in our Cloud product. This Operator intentionally embeds our domain and technology decisions into the software itself. The SRE team at Kolide endeavors to have the Operator manage as much of the daily operations of Kolide Cloud as possible.

The Tenant CRD

The main interface for interacting with the system is the “Tenant” Custom Resource Definition. Consider the following example of a Tenant resource:

```yaml
apiVersion: kolide.com/v1
kind: Tenant
metadata:
  name: dababe
  labels:
    name: dababe
spec:
  databases:
    postgres:
    - name: cloud
    - name: kstore
    mysql:
    - name: fleet
  email: mike@kolide.co
  organization: Kolide Inc.
  pgbouncer:
    defaultPoolSize: 10
  repos:
  - name: cloud
    container:
      name: gcr.io/kolide-private-containers/cloud
      version: cdac80a
    varz:
      ref: cdac80a
      template: tools/k8s/cloud.template
      varz: tools/k8s/varz.yaml
  - name: kstore
    container:
      name: gcr.io/kolide-private-containers/kstore
      version: 29ca464
    varz:
      ref: 29ca464
      template: tools/k8s/kstore.template
      varz: tools/k8s/varz.yaml
  - name: fleet
    container:
      name: gcr.io/kolide-private-containers/fleet
      version: 8acd61e
    varz:
      ref: 8acd61e
      template: tools/k8s/fleet.template
      varz: tools/k8s/varz.yaml
```

Each Tenant resource maps to a single customer’s instance of the application. This approach allows us to boil down a tenant to just the attributes that change on an ongoing basis, such as:

What databases should be reserved for each tenant?

What versions of each component of each tenant should be deployed?

How large should the database connection pool be?

Details like initial customer email and organization are included so that internal systems can reach out to customers during the account provisioning process.

Replicas are explicitly not managed via this CRD because we manage replicas via external systems that react based on observed resource utilization and customer size.

Control Loop

Whenever a Tenant resource is updated, the resource is queued for processing on a work queue in the controller. When a tenant is processed by the controller, the following general steps occur:

Create the Kubernetes namespace

Reserve and configure access to all required data infrastructure

Make sure that the relevant application secrets are available in the tenant’s namespace

Deploy the correct version of each component of the tenant

Apply network and container isolation primitives to secure workload execution

It’s important for each of these steps to be idempotent. It’s also critical for the happy path through these steps (i.e., nothing has changed since the last synchronization) to complete extremely quickly, because a global cluster event (like a code deploy) may enqueue literally every tenant for processing. Our product is only just out of beta, but that already means several hundred tenants for us, and the number grows every day as new customers sign up on our website.
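A deduplicating work queue keeps a cluster-wide event from turning into redundant work: if a tenant is enqueued several times before a worker picks it up, it is synced once. The sketch below is a simplified, single-goroutine stand-in for the work queues in client-go’s `util/workqueue` package; the `queue` type, `syncTenant`, and the single namespace step are hypothetical. Each step reports whether it changed anything, so a sync with zero changes is the happy path.

```go
package main

import "fmt"

// queue is a FIFO of tenant keys that ignores a key already waiting to be
// processed, so a burst of events for one tenant yields a single sync.
type queue struct {
	items   []string
	pending map[string]bool
}

func newQueue() *queue { return &queue{pending: map[string]bool{}} }

// Add enqueues key unless it is already pending.
func (q *queue) Add(key string) {
	if q.pending[key] {
		return
	}
	q.pending[key] = true
	q.items = append(q.items, key)
}

// Get pops the next key; ok is false when the queue is empty.
func (q *queue) Get() (key string, ok bool) {
	if len(q.items) == 0 {
		return "", false
	}
	key, q.items = q.items[0], q.items[1:]
	delete(q.pending, key)
	return key, true
}

// step is one idempotent stage of a tenant sync. It reports whether it had
// to change anything, which makes the happy path easy to observe.
type step struct {
	name string
	run  func(tenant string) (changed bool, err error)
}

// syncTenant runs the steps in order and stops at the first error so the
// tenant can be retried later; re-running completed steps must be harmless.
func syncTenant(tenant string, steps []step) (int, error) {
	changes := 0
	for _, s := range steps {
		changed, err := s.run(tenant)
		if err != nil {
			return changes, fmt.Errorf("%s: %s: %w", tenant, s.name, err)
		}
		if changed {
			changes++
		}
	}
	return changes, nil
}

func main() {
	namespaces := map[string]bool{}
	steps := []step{
		{"namespace", func(t string) (bool, error) {
			if namespaces[t] {
				return false, nil // already exists: nothing to do
			}
			namespaces[t] = true
			return true, nil
		}},
		// Hypothetical further steps: databases, secrets, deploy, isolation.
	}

	q := newQueue()
	for _, t := range []string{"dababe", "dababe", "acme"} {
		q.Add(t) // the duplicate "dababe" event is coalesced
	}
	for t, ok := q.Get(); ok; t, ok = q.Get() {
		n, err := syncTenant(t, steps)
		fmt.Println(t, n, err)
	}
}
```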

Data Infrastructure

Right now, we manage data infrastructure by interacting with GCP APIs directly. Each tenant resource enumerates its own database requirements, and the controller takes care of creating the requisite databases and user accounts. The controller then saves the per-tenant credentials in our secret storage mechanism, which is described in more detail in the next section.
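The database step has to be idempotent like every other step. One way to structure it, sketched here under assumptions, is to put the cloud database API behind a small interface, check before creating, and generate a password only when a user is created for the first time. The `Provisioner` interface, the in-memory fake, and the `<tenant>_<db>` naming scheme are all hypothetical; none of these are GCP API calls.

```go
package main

import (
	"crypto/rand"
	"encoding/hex"
	"fmt"
)

// Provisioner abstracts a managed database service (hypothetical).
type Provisioner interface {
	DatabaseExists(name string) bool
	CreateDatabase(name string) error
	UserExists(name string) bool
	CreateUser(name, password string) error
}

// fakeProvisioner is an in-memory stand-in used for illustration and tests.
type fakeProvisioner struct {
	dbs   map[string]bool
	users map[string]string
}

func (f *fakeProvisioner) DatabaseExists(n string) bool  { return f.dbs[n] }
func (f *fakeProvisioner) CreateDatabase(n string) error { f.dbs[n] = true; return nil }
func (f *fakeProvisioner) UserExists(n string) bool      { _, ok := f.users[n]; return ok }
func (f *fakeProvisioner) CreateUser(n, pw string) error { f.users[n] = pw; return nil }

// ensureDatabase creates the tenant's database and user if they are missing.
// It returns a non-empty password only when a new user was created; the
// caller is then responsible for persisting it in secret storage.
func ensureDatabase(p Provisioner, tenant, db string) (string, error) {
	name := tenant + "_" + db
	if !p.DatabaseExists(name) {
		if err := p.CreateDatabase(name); err != nil {
			return "", err
		}
	}
	if p.UserExists(name) {
		return "", nil // happy path: nothing to change
	}
	buf := make([]byte, 16)
	if _, err := rand.Read(buf); err != nil {
		return "", err
	}
	pw := hex.EncodeToString(buf)
	if err := p.CreateUser(name, pw); err != nil {
		return "", err
	}
	return pw, nil
}

func main() {
	p := &fakeProvisioner{dbs: map[string]bool{}, users: map[string]string{}}
	pw1, _ := ensureDatabase(p, "dababe", "cloud")
	pw2, _ := ensureDatabase(p, "dababe", "cloud")
	fmt.Println(len(pw1), pw2 == "") // 32 true
}
```

A production version would also need to handle a user that was created but whose password was never saved, for example by rotating the password on the next pass.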

For future versions of our operator, we are actively exploring provisioning infrastructure services in a more generic way by taking advantage of the Open Service Broker API. The OSB API defines a specification for provisioning and binding to infrastructure services. The ecosystem around the OSB API is extensive, and the folks working on adding Service Catalog support to Kubernetes are doing excellent work.

Secret Management

In Kubernetes, workloads can only access secrets in their namespace. Because we run each customer’s workloads in their own namespace, we must copy shared secrets into each tenant namespace. Further, each tenant has several unique secrets for accessing isolated data infrastructure.
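Copying shared secrets into every tenant namespace should not rewrite them on every pass, or the happy path stops being cheap. The following sketch compares before writing. To stay dependency-free it uses a minimal `Secret` struct standing in for `corev1.Secret` (whose real `Data` field is also a `map[string][]byte`); `syncShared`, the `write` callback, and the `shared` namespace name are hypothetical.

```go
package main

import (
	"bytes"
	"fmt"
)

// Secret is a minimal stand-in for corev1.Secret.
type Secret struct {
	Namespace string
	Name      string
	Data      map[string][]byte
}

// copyInto returns a deep copy of src placed in namespace ns.
func copyInto(src Secret, ns string) Secret {
	data := make(map[string][]byte, len(src.Data))
	for k, v := range src.Data {
		data[k] = append([]byte(nil), v...)
	}
	return Secret{Namespace: ns, Name: src.Name, Data: data}
}

// sameData reports whether two secrets carry identical key/value pairs.
func sameData(a, b map[string][]byte) bool {
	if len(a) != len(b) {
		return false
	}
	for k, v := range a {
		w, ok := b[k]
		if !ok || !bytes.Equal(v, w) {
			return false
		}
	}
	return true
}

// syncShared copies each shared secret into the tenant namespace, writing only
// when the tenant's copy is missing or stale. existing is keyed by name.
func syncShared(shared []Secret, tenantNS string, existing map[string]Secret, write func(Secret)) int {
	writes := 0
	for _, s := range shared {
		if cur, ok := existing[s.Name]; ok && sameData(cur.Data, s.Data) {
			continue // up to date: no API call needed
		}
		write(copyInto(s, tenantNS))
		writes++
	}
	return writes
}

func main() {
	shared := []Secret{{Namespace: "shared", Name: "smtp", Data: map[string][]byte{"password": []byte("s3cret")}}}
	tenant := map[string]Secret{}
	write := func(s Secret) { tenant[s.Name] = s }
	fmt.Println(syncShared(shared, "dababe", tenant, write)) // 1
	fmt.Println(syncShared(shared, "dababe", tenant, write)) // 0
}
```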

Being somewhat loyal Google Cloud customers at the moment, we use Google Cloud Storage and Google Cloud Key Management Service to store secrets encrypted at rest. SREs and infrastructure services are granted access to encrypt and decrypt secrets in different environments as necessary using Google Cloud IAM. Since we use Kubernetes for literally everything at Kolide, we store serialized corev1.Secret blobs in GCS, encrypted at rest via KMS. It’s then easy for us to write tools which read secrets from GCS and unmarshal each secret into a Go object. These tools are authenticated via GCP IAM rules, and access can be easily audited and revoked as necessary.

The interface that we implement using the GCS and KMS APIs is as follows:

```go
package secret

import (
	"context"

	corev1 "k8s.io/api/core/v1"
)

// Store is the interface which defines the controller's interactions with an
// arbitrary exo-cluster secret storage mechanism.
type Store interface {
	Get(ctx context.Context, namespace string, name string) (*corev1.Secret, error)
	List(ctx context.Context, namespace string) ([]*corev1.Secret, error)
	Put(ctx context.Context, s *corev1.Secret) error
	Delete(ctx context.Context, namespace, name string) error
}
```

While our exo-cluster secret storage solution provides an effective mechanism for initializing clusters, neither GCS nor KMS is low-latency enough to be in the hot path of the control loop. To support hundreds, if not thousands, of tenants, the happy path of a tenant control loop needs to complete extremely quickly when no changes are required.

To do this, we first synchronize secrets from storage into a dedicated namespace within the cluster and keep that namespace up-to-date. We then use well-established Kubernetes API primitives to maintain a local copy of all secrets in the cluster. When synchronizing a tenant, the control loop uses this cache to avoid unnecessary network round-trips to any external secret storage mechanism whenever possible.

Optimizing the efficiency of the control loop has largely been an exercise in fast, accurate, secure secret management.

Application Components

Each application component consists of a templated set of Kubernetes resources and a set of values to interpolate into them. Internally, we call our tooling for applying values to resource templates “varz”. Each component of a tenant is developed in its own repository, and the Kubernetes configurations for that component are included in that repository.

The main reason we include configurations with each repository is so that application configuration can be modified atomically in the same commit that modifies application deployment requirements. For example, if a service adds a new required environment variable, the developer can add its default value to the environment-specific Kubernetes deployment configurations in the same commit.

When we deploy tenant components, we specify the commit hash that we want to deploy. The control loop will then deploy a container built at that ref by applying configurations pulled from the repository at that commit. This makes it easy to atomically maintain configuration while enabling developers to own the configuration for their services.

We often get asked why we don’t use Helm for this use-case. While our resource templates are similar to Helm Charts, a few features of Helm make it unsuitable for us. Notably, Helm requires a cluster service called Tiller, which is an unauthenticated-by-default Cluster Admin service that installs software in a cluster. Since our controller is already a privileged service that can install software, Tiller is a superfluous service that adds unnecessary attack surface. Fortunately, the design documents for Helm V3 describe a client-side model for installing Helm Charts in which Tiller is no longer a required component. Helm V3 sounds much more suitable for our use-case, and we’re looking forward to trying it out once it’s available.

Writing Kubernetes Controllers in Go

I’ve previously written on the Kolide Blog about using Go for scalable operating system analytics. When it comes to writing software in the Kubernetes ecosystem, Go is hands-down the best tool for the job.

I feel there can be a high barrier to entry in learning to use Kubernetes. Many of the concepts can feel foreign and it’s not always clear why every line of yaml needs to exist. Jason Moiron, a well-respected Go developer, recently wrote an interesting article which asked if Kubernetes is too complicated.

In my personal experience, while using Kubernetes felt tricky at first, once I got the core concepts down, I realized that everything works together in a really predictable way that actually accelerates your ability to learn about and integrate new concepts.

Similarly, programming against Kubernetes APIs can feel foreign at first to an experienced Go developer. In my experience, however, once you learn the structure of the Go API client and how Kubernetes ecosystem projects organize themselves, it becomes incredibly easy to program against mainline Kubernetes APIs as well as external/custom APIs in a consistent, intuitive fashion.

Go API Client: “Client Go”

The Kubernetes Go API Client is maintained by the API Machinery Special Interest Group (SIG): https://github.com/kubernetes/client-go

The Go client has a really consistent look-and-feel for all types (Deployments, Pods, Namespaces, etc.) in all API groups (core/v1, apps/v1, etc.). For custom resources, there are code generation tools that allow users to generate API clients from the struct definitions. Stefan Schimanski wrote a great introduction to Kubernetes API client code generation on the Red Hat OpenShift Blog.

Directory Structure

Most Kubernetes ecosystem projects follow a somewhat consistent directory layout. From the root of the repository, a controller will usually have the following directories:

cmd/foo will contain the binary entrypoint

pkg/apis will contain the typed Kubernetes resources

pkg/client or pkg/generated will contain generated client code for interacting with that project’s resources

One project that does a great job following the patterns of a consistent Kubernetes ecosystem project is the Ark disaster recovery tool, written by some really helpful engineers at Heptio. Given that Joe Beda, one of the creators of Kubernetes, is the CTO of Heptio, it’s perhaps not surprising that their code sets a good example. We use Ark to back up and provision Kubernetes clusters, but that is a story for another blog post.

Other operators that follow these consistent patterns nicely in my opinion are:

CoreOS’s Etcd Operator

CoreOS’s Prometheus Operator

Kinvolk’s Habitat Operator

Repository Size

Dave Cheney has shared his opinion on how large a controller codebase should be. At the time of writing, our controller is about 12,000 lines of Kolide-authored Go code. We’ve found that a codebase of this size is extremely manageable, and we are not concerned about our ability to accommodate future growth.

CoreOS’s Operator Framework

On my birthday this year, CoreOS released the Operator Framework, an open source toolkit designed to manage Operators in a more effective, automated, and scalable way. So far, the Operator Framework contains three components which help with:

Operator development

Lifecycle management

Resource Metering

For our use-case at Kolide, complicated lifecycle management and resource metering are not problems that we have, but one common problem that all controllers have to solve is the queueing and synchronized processing of resources as they change over time.

For most new controllers, I think it probably makes sense to consider using the CoreOS tooling for this purpose. If your use-case is sufficiently complicated, or you just want more control, it may be worth using something like the official Sample Controller as an adaptable starting point instead.

Conclusions

Kubernetes has been described as a set of APIs for building distributed systems and as a “platform for building platforms”. We’ve enjoyed using Kubernetes to deploy, scale, and secure our product. We’re looking forward to continuing to discuss how we use Kubernetes to deliver Kolide Cloud here on the Kolide Blog.